Prerequisites:

- Git
- Jenkins
- SonarScanner (sonar-scanner)
- Snyk
- Java, Maven, Node.js, Python, etc. (which of these you need depends on your project’s language)
- Docker
- Aqua Trivy
- Kubernetes
- Zaproxy (OWASP ZAP)
- Ansible

Jenkinsfile (Groovy Script)

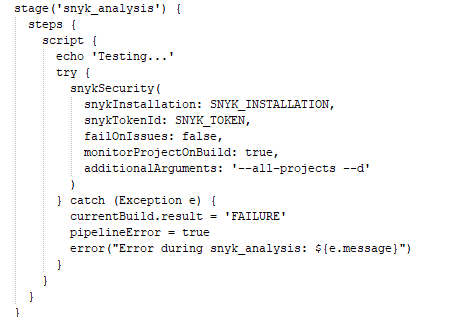

// Define the detectJavaVersion function outside of the pipeline block

def detectJavaVersion() {

def javaVersionOutput = sh(script: 'java -version 2>&1', returnStatus: false, returnStdout: true).trim()

def javaVersionMatch = javaVersionOutput =~ /openjdk version "(\d+\.\d+)/

if (javaVersionMatch) {

def javaVersion = javaVersionMatch[0][1]

if (javaVersion.startsWith("1.8")) {

return '8'

} else if (javaVersion.startsWith("11")) {

return '11'

} else if (javaVersion.startsWith("17")) {

return '17'

} else {

error("Unsupported Java version detected: ${javaVersion}")

}

} else {

error("Java version information not found in output.")

}

}

pipeline {

agent any

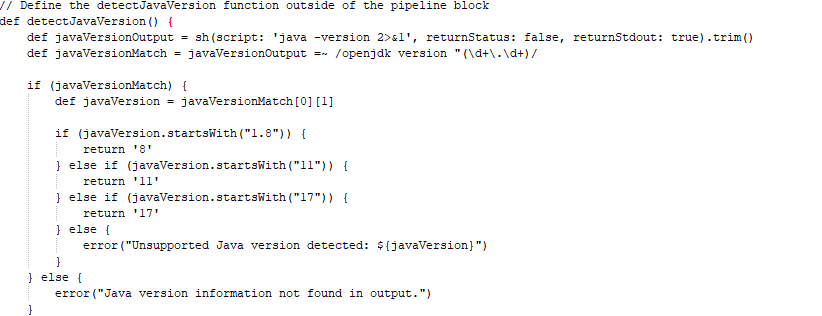

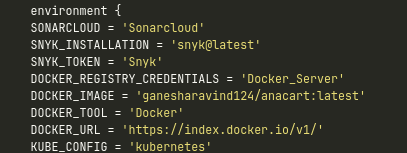

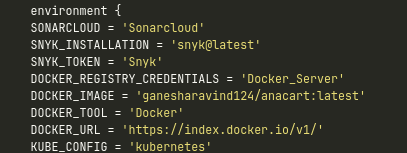

environment {

SONARCLOUD = 'Sonarcloud'

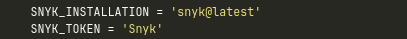

SNYK_INSTALLATION = 'snyk@latest'

SNYK_TOKEN = 'Snyk'

DOCKER_REGISTRY_CREDENTIALS = 'Docker_Server'

DOCKER_IMAGE = 'redhaanggara/anacart:latest'

DOCKER_TOOL = 'Docker'

DOCKER_URL = 'https://index.docker.io/v1/'

KUBE_CONFIG = 'kubernetes'

}

stages {

stage('Clean Workspace') {

steps {

cleanWs()

}

}

stage('Git-Checkout') {

steps {

checkout scm

}

}

// /opt/sonar-scanner-5.0.1.3006-linux/bin/sonar-scanner

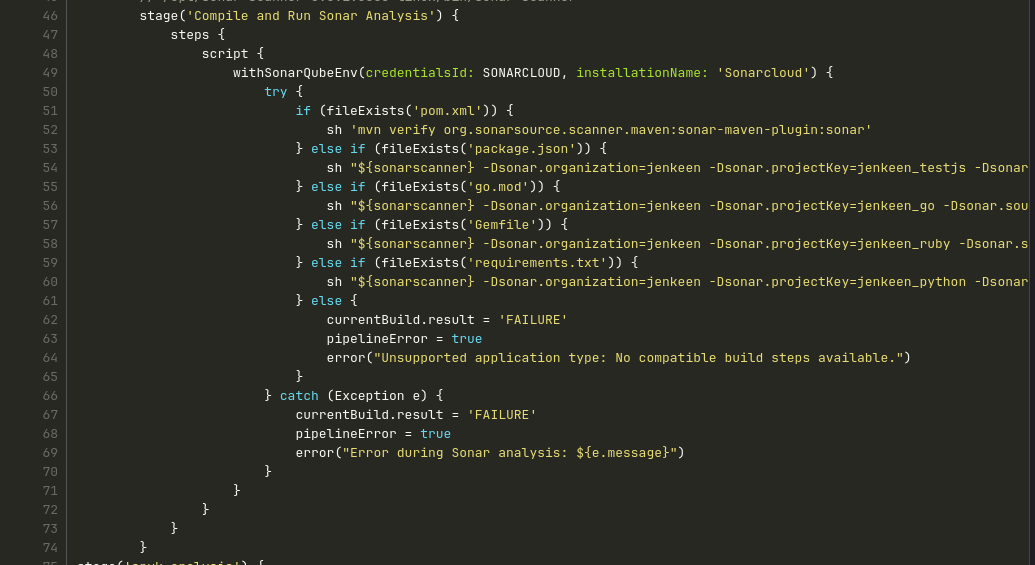

stage('Compile and Run Sonar Analysis') {

steps {

script {

withSonarQubeEnv(credentialsId: SONARCLOUD, installationName: 'Sonarcloud') {

try {

if (fileExists('pom.xml')) {

sh 'mvn verify org.sonarsource.scanner.maven:sonar-maven-plugin:sonar'

} else if (fileExists('package.json')) {

sh "${sonarscanner} -Dsonar.organization=jenkeen -Dsonar.projectKey=jenkeen_testjs -Dsonar.sources=. -Dsonar.host.url=https://sonarcloud.io -Dsonar.login=b8c55c159b1fd559baaccf9bee42344faed0a7b4"

} else if (fileExists('go.mod')) {

sh "${sonarscanner} -Dsonar.organization=jenkeen -Dsonar.projectKey=jenkeen_go -Dsonar.sources=. -Dsonar.host.url=https://sonarcloud.io -Dsonar.login=b8c55c159b1fd559baaccf9bee42344faed0a7b4"

} else if (fileExists('Gemfile')) {

sh "${sonarscanner} -Dsonar.organization=jenkeen -Dsonar.projectKey=jenkeen_ruby -Dsonar.sources=. -Dsonar.host.url=https://sonarcloud.io -Dsonar.login=b8c55c159b1fd559baaccf9bee42344faed0a7b4"

} else if (fileExists('requirements.txt')) {

sh "${sonarscanner} -Dsonar.organization=jenkeen -Dsonar.projectKey=jenkeen_python -Dsonar.sources=. -Dsonar.host.url=https://sonarcloud.io -Dsonar.login=b8c55c159b1fd559baaccf9bee42344faed0a7b4"

} else {

currentBuild.result = 'FAILURE'

pipelineError = true

error("Unsupported application type: No compatible build steps available.")

}

} catch (Exception e) {

currentBuild.result = 'FAILURE'

pipelineError = true

error("Error during Sonar analysis: ${e.message}")

}

}

}

}

}

stage('snyk_analysis') {

steps {

script {

echo 'Testing...'

try {

snykSecurity(

snykInstallation: SNYK_INSTALLATION,

snykTokenId: SNYK_TOKEN,

failOnIssues: false,

monitorProjectOnBuild: true,

additionalArguments: '--all-projects --d'

)

} catch (Exception e) {

currentBuild.result = 'FAILURE'

pipelineError = true

error("Error during snyk_analysis: ${e.message}")

}

}

}

}

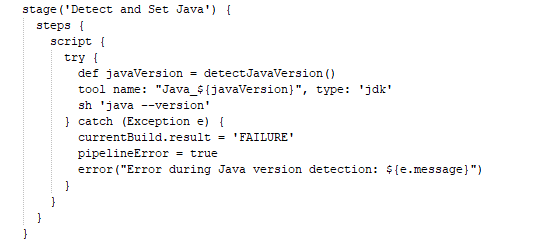

stage('Detect and Set Java') {

steps {

script {

try {

def javaVersion = detectJavaVersion()

tool name: "Java_${javaVersion}", type: 'jdk'

sh 'java --version'

} catch (Exception e) {

currentBuild.result = 'FAILURE'

pipelineError = true

error("Error during Java version detection: ${e.message}")

}

}

}

}

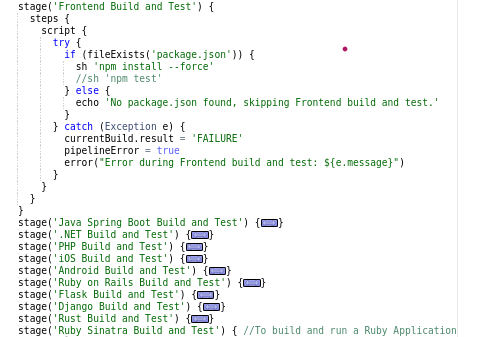

stage('Frontend Build and Test') {

steps {

script {

try {

if (fileExists('package.json')) {

//sh 'npm install --force'

//sh 'npm test'

} else {

echo 'No package.json found, skipping Frontend build and test.'

}

} catch (Exception e) {

currentBuild.result = 'FAILURE'

pipelineError = true

error("Error during Frontend build and test: ${e.message}")

}

}

}

}

stage('Java Spring Boot Build and Test') {

steps {

script {

try {

if (fileExists('pom.xml')) {

sh 'mvn clean package'

sh 'mvn test'

} else {

// If pom.xml doesn't exist, print a message and continue

echo 'No pom.xml found, skipping Java Spring Boot build and test.'

}

} catch (Exception e) {

currentBuild.result = 'FAILURE'

error("Error during Java Spring Boot build and test: ${e.message}")

}

}

}

}

stage('.NET Build and Test') {

steps {

script {

try {

if (fileExists('YourSolution.sln')) {

sh 'dotnet build'

sh 'dotnet test'

} else {

// If YourSolution.sln doesn't exist, print a message and continue

echo 'No YourSolution.sln found, skipping .NET build and test.'

}

} catch (Exception e) {

currentBuild.result = 'FAILURE'

error("Error during .NET build and test: ${e.message}")

}

}

}

}

stage('PHP Build and Test') {

steps {

script {

try {

if (fileExists('composer.json')) {

sh 'composer install'

sh 'phpunit'

} else {

// If composer.json doesn't exist, print a message and continue

echo 'No composer.json found, skipping PHP build and test.'

}

} catch (Exception e) {

currentBuild.result = 'FAILURE'

error("Error during PHP build and test: ${e.message}")

}

}

}

}

stage('iOS Build and Test') {

steps {

script {

try {

if (fileExists('YourProject.xcodeproj')) {

xcodebuild(buildDir: 'build', scheme: 'YourScheme')

} else {

// If YourProject.xcodeproj doesn't exist, print a message and continue

echo 'No YourProject.xcodeproj found, skipping iOS build and test.'

}

} catch (Exception e) {

currentBuild.result = 'FAILURE'

error("Error during iOS build and test: ${e.message}")

}

}

}

}

stage('Android Build and Test') {

steps {

script {

try {

if (fileExists('build.gradle')) {

sh './gradlew build'

sh './gradlew test'

} else {

// If build.gradle doesn't exist, print a message and continue

echo 'No build.gradle found, skipping Android build and test.'

}

} catch (Exception e) {

currentBuild.result = 'FAILURE'

error("Error during Android build and test: ${e.message}")

}

}

}

}

stage('Ruby on Rails Build and Test') {

steps {

script {

try {

// Check if Gemfile.lock exists

if (fileExists('Gemfile.lock')) {

sh 'bundle install' // Install Ruby gem dependencies

sh 'bundle exec rake db:migrate' // Run database migrations

sh 'bundle exec rails test' // Run Rails tests (adjust as needed)

} else {

// If Gemfile.lock doesn't exist, print a message and continue

echo 'No Gemfile.lock found, skipping Ruby on Rails build and test.'

}

} catch (Exception e) {

currentBuild.result = 'FAILURE'

error("Error during Ruby on Rails build and test: ${e.message}")

}

}

}

}

stage('Flask Build and Test') { // To build and run a Python Flask Framework Application

steps {

script {

try {

if (fileExists('app.py')) {

sh 'pip install -r requirements.txt' // Install dependencies

sh 'python -m unittest discover' // Run Flask unit tests

} else {

// If app.py doesn't exist, print a message and continue

echo 'No app.py found, skipping Flask build and test.'

}

} catch (Exception e) {

currentBuild.result = 'FAILURE'

error("Error during Flask build and test: ${e.message}")

}

}

}

}

stage('Django Build and Test') { // To build and run a Python Django Framework Application

steps {

script {

try {

if (fileExists('manage.py')) {

sh 'pip install -r requirements.txt' // Install dependencies

sh 'python manage.py migrate' // Run Django migrations

sh 'python manage.py test' // Run Django tests

} else {

// If manage.py doesn't exist, print a message and continue

echo 'No manage.py found, skipping Django build and test.'

}

} catch (Exception e) {

currentBuild.result = 'FAILURE'

error("Error during Django build and test: ${e.message}")

}

}

}

}

stage('Rust Build and Test') { //To build and run a Rust Application

steps {

script {

try {

if (fileExists('Cargo.toml')) { // Check if Cargo.toml file exists

env.RUST_BACKTRACE = 'full' // Set the RUST_BACKTRACE environment variable to full for better error messages

sh 'cargo build' // Build the Rust project

sh 'cargo test' // Run the Rust tests

} else {

// If Cargo.toml doesn't exist, print a message and continue

echo "No Cargo.toml file found. Skipping Rust build and test."

}

} catch (Exception e) {

// Set the build result to FAILURE and print an error message

currentBuild.result = 'FAILURE'

error("Error during Rust build and test: ${e.message}")

}

}

}

}

stage('Ruby Sinatra Build and Test') { //To build and run a Ruby Application

steps {

script {

try {

if (fileExists('app.rb')) { // Check if app.rb file exists

sh 'gem install bundler' // Install Bundler

sh 'bundle install' // Use bundle exec to ensure gem dependencies are isolated

sh 'bundle exec rake test' // Run the Sinatra tests using Rake

} else {

// If app.rb doesn't exist, print a message and continue

echo "No app.rb file found. Skipping Ruby Sinatra build and test."

}

} catch (Exception e) {

// Set the build result to FAILURE and print an error message

currentBuild.result = 'FAILURE'

error("Error during Ruby Sinatra build and test: ${e.message}")

}

}

}

}

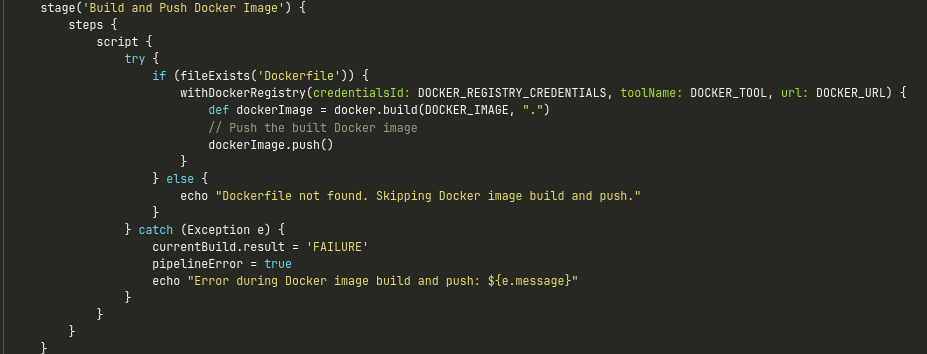

stage('Build and Push Docker Image') {

steps {

script {

try {

if (fileExists('Dockerfile')) {

withDockerRegistry(credentialsId: DOCKER_REGISTRY_CREDENTIALS, toolName: DOCKER_TOOL, url: DOCKER_URL) {

def dockerImage = docker.build(DOCKER_IMAGE, ".")

// Push the built Docker image

dockerImage.push()

}

} else {

echo "Dockerfile not found. Skipping Docker image build and push."

}

} catch (Exception e) {

currentBuild.result = 'FAILURE'

pipelineError = true

echo "Error during Docker image build and push: ${e.message}"

}

}

}

}

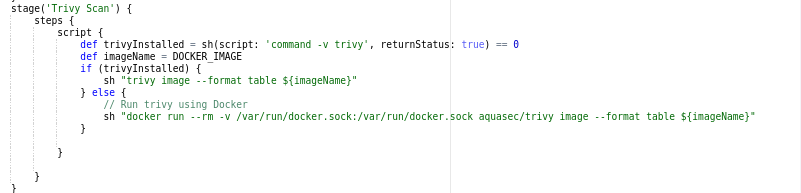

stage('Trivy Scan') {

steps {

script {

def trivyInstalled = sh(script: 'command -v trivy', returnStatus: true) == 0

def imageName = DOCKER_IMAGE

if (trivyInstalled) {

sh "trivy image --format table ${imageName}"

} else {

// Run trivy using Docker

sh "docker run --rm -v /var/run/docker.sock:/var/run/docker.sock aquasec/trivy image --format table ${imageName}"

}

}

}

}

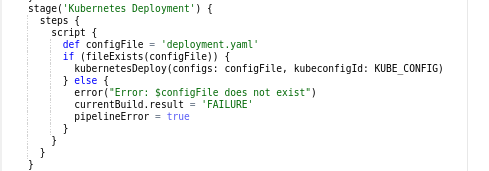

stage('Kubernetes Deployment') {

steps {

script {

def configFile = 'deployment.yaml'

def namespace = 'anacart' // Replace 'your-namespace' with your actual namespace

if (fileExists(configFile)) {

kubernetesDeploy(configs: configFile, kubeconfigId: KUBE_CONFIG, namespace: namespace)

} else {

error("Error: $configFile does not exist")

currentBuild.result = 'FAILURE'

pipelineError = true

}

}

}

}

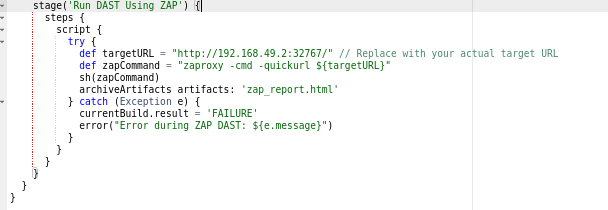

stage('Run DAST Using ZAP') {

steps {

script {

try {

def targetURL = "http://192.168.58.2:32765" // Use the obtained service URL as the target URL

def zapCommand = "zaproxy -cmd -quickurl ${targetURL}"

//sh(zapCommand)

sh("echo zap_report.html")

//archiveArtifacts artifacts: 'zap_report.html'

} catch (Exception e) {

currentBuild.result = 'FAILURE'

error("Error during ZAP DAST: ${e.message}")

}

}

}

}

}

}

Introduction:

In today’s fast-paced software development landscape, efficient CI/CD pipelines are essential. This post walks through building a CI/CD pipeline with Jenkins that integrates a range of tools to automate the build, security scanning, and deployment of multi-language applications.

Setting the Stage:

This project involved orchestrating a CI/CD pipeline that encompassed Git, SonarCloud, Snyk, multi-language build automation, Docker, Aqua Trivy, Ansible, Kubernetes, and ZAP Proxy. Leveraging Jenkins’ flexibility and Groovy scripting capabilities, I combined these tools into a single, cohesive pipeline.

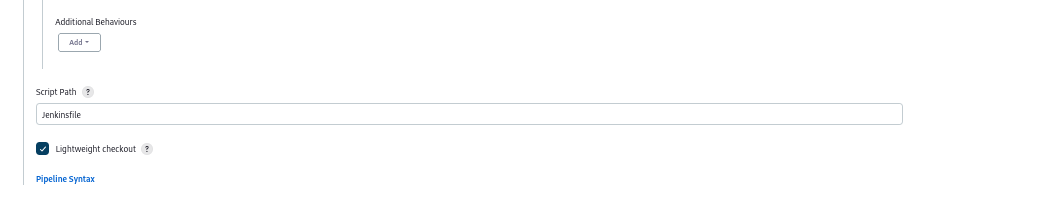

Pipeline Config:

Open the configuration page of the pipeline job and add your Jenkins pipeline script or script path there. There are two options available:

1. Pipeline script: write your own script directly in the job configuration.

2. Pipeline script from SCM: Jenkins uses the Jenkinsfile in your SCM repository.

Here I am choosing the second option:

Choose your SCM, enter the URL of your repository and your branch, and set Script Path to the location of your Jenkinsfile (Jenkinsfile at the repository root by default).

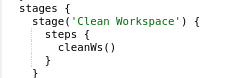

Stage 1 (Clean Workspace)

In this stage, we clean up the workspace by deleting the files left over from previous builds. Once it finishes, Git pulls the latest files and everything runs from a clean state.
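A minimal declarative sketch of this stage, assuming the Workspace Cleanup plugin (which provides cleanWs()) is installed. The post { always { ... } } block is an optional addition that is not part of the original pipeline; it cleans the workspace again when the run finishes.

```groovy
// Sketch: wipe the workspace before checkout and, optionally, after the run ends.
pipeline {
    agent any
    stages {
        stage('Clean Workspace') {
            steps {
                // cleanWs() comes from the Workspace Cleanup plugin;
                // the built-in deleteDir() step also empties the current directory
                cleanWs()
            }
        }
        stage('Git-Checkout') {
            steps {
                // Fetch the sources into the now-empty workspace
                checkout scm
            }
        }
    }
    post {
        always {
            // Optional: clean up after the build so the agent's disk stays small
            cleanWs()
        }
    }
}
```

Archived artifacts (for example a ZAP report) are copied to the controller before post runs, so cleaning afterwards does not lose them.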

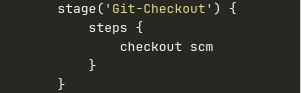

Stage 2 (Git Checkout)

Git:

We used a variety of source code management systems in our project, including GitHub, GitLab, AWS CodeCommit, Bitbucket, SVN, TFS, and others; however, I didn’t include them in the flow chart.

Git Configure

Enter your Git details in the SCM section of the job configuration described above: the URL of your repository and the name of your branch.

Git Checkout

Note: If your Git repository is private, add your GitLab personal access token or Git credentials to Jenkins and select them in the SCM configuration.
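With Pipeline script from SCM, checkout scm already reuses the credentials selected in the job configuration. If you instead use a plain Pipeline script job, or need to clone a repository explicitly, a sketch with the git step might look like this. The credential ID gitlab-credentials, the branch, and the URL are hypothetical placeholders; a GitLab personal access token is typically stored as a "Username with password" credential with the token as the password.

```groovy
// Sketch: explicit checkout of a private repository.
// 'gitlab-credentials', the branch and the URL are hypothetical placeholders.
pipeline {
    agent any
    stages {
        stage('Git-Checkout') {
            steps {
                // The git step clones the repository using the stored credential
                git branch: 'main',
                    credentialsId: 'gitlab-credentials',
                    url: 'https://gitlab.com/your-group/your-project.git'
            }
        }
    }
}
```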

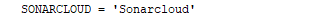

Stage 3 (SonarCloud)

SonarCloud performs SAST and code-quality scans. Integrate it with Jenkins by adding a SonarCloud authentication token (or personal access token) as a credential. Register your SonarScanner under Jenkins tools with a name such as sonar-scanner (any name works), and don’t forget to reference that same name in your pipeline.

There are two ways to run SonarCloud:

- Create a sonar-project.properties file in your Git repository and put your SonarCloud details in it.

In this case, add your sonar-scanner path to the Jenkins pipeline script, along with the checks for your build files (pom.xml, .csproj, solution file, package.json, Gemfile, requirements.txt, etc.).

- Pass the SonarCloud settings directly in the Jenkinsfile.
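For the first option, a minimal sketch might look like this. It assumes a SonarScanner tool named sonar-scanner and a SonarQube server entry named Sonarcloud in Jenkins, with sonar.organization, sonar.projectKey, and sonar.sources defined in sonar-project.properties. The Quality Gate stage is an optional addition; waitForQualityGate only returns once SonarCloud notifies Jenkins, so it assumes a SonarCloud webhook pointing at your Jenkins URL plus /sonarqube-webhook/.

```groovy
// Sketch of option 1: the scanner reads its settings from sonar-project.properties.
pipeline {
    agent any
    stages {
        stage('SonarCloud Analysis') {
            steps {
                script {
                    // Resolve the scanner installation configured under Jenkins tools
                    def scannerHome = tool 'sonar-scanner'
                    // Injects the server URL and token; nothing secret appears in the command
                    withSonarQubeEnv('Sonarcloud') {
                        // Organization, project key and sources come from
                        // sonar-project.properties at the repository root
                        sh "${scannerHome}/bin/sonar-scanner"
                    }
                }
            }
        }
        stage('Quality Gate') {
            steps {
                // Optional: fail the pipeline if the analysis does not pass the quality gate
                timeout(time: 5, unit: 'MINUTES') {
                    waitForQualityGate abortPipeline: true
                }
            }
        }
    }
}
```

Compared with passing -D flags on the command line, the properties file keeps project settings versioned next to the code.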

compile and run sonar analysis

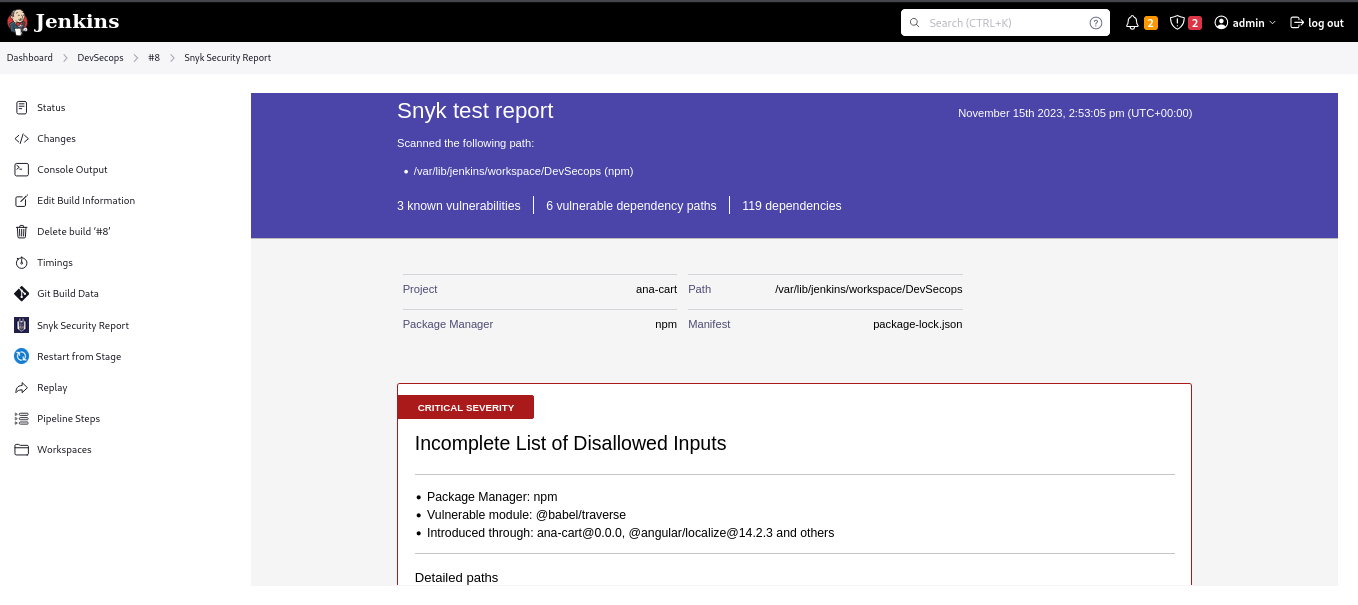

Stage 4 (Snyk)

Snyk:

Snyk performs security vulnerability scans of your project’s dependencies. Integrate it with Jenkins by adding a Snyk personal access token or authentication token as a credential. You can name your Snyk installation snyk@latest, or anything else you choose, as long as the pipeline uses the same name.

In the pipeline, specify the installation name and the ID of your Snyk token credential so the plugin can authenticate against the Snyk API.
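If you prefer to call the Snyk CLI directly instead of the snykSecurity step, a sketch might look like this. It assumes the snyk CLI is installed on the agent and a hypothetical Secret text credential named snyk-api-token. Note that here SNYK_TOKEN holds the token itself, which the CLI reads from the environment, whereas in the pipeline above SNYK_TOKEN holds a credential ID, so treat this as a standalone example.

```groovy
// Sketch: run the Snyk CLI directly, outside the snykSecurity plugin step.
// 'snyk-api-token' is a hypothetical Secret text credential.
pipeline {
    agent any
    stages {
        stage('Snyk CLI Scan') {
            steps {
                script {
                    withCredentials([string(credentialsId: 'snyk-api-token', variable: 'SNYK_TOKEN')]) {
                        // The CLI authenticates from the SNYK_TOKEN environment variable
                        def rc = sh(script: 'snyk test --all-projects --severity-threshold=high', returnStatus: true)
                        if (rc == 1) {
                            // Exit code 1: vulnerabilities at or above the threshold were found
                            unstable('Snyk found high-severity vulnerabilities')
                        } else if (rc != 0) {
                            // Other non-zero codes mean the scan itself could not run
                            error("Snyk CLI failed with exit code ${rc}")
                        }
                    }
                }
            }
        }
    }
}
```

Marking the build unstable rather than failed mirrors failOnIssues: false in the plugin step: findings are visible without stopping the pipeline.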

Snyk analysis

Stage 5 (Java Detection)

The pipeline detects the installed Java version automatically and tells you whether it is supported (Java 8, 11, and 17). Before running this stage, set up the matching JDK installations under Jenkins tools, named Java_8, Java_11, and Java_17, because the stage looks them up by those names.

DetectJavaVersion

The Detect and Set Java stage in the pipeline script looks like this:

Detect and Set Java
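If your project always uses one JDK, the declarative tools directive is a simpler alternative: it puts the named JDK on PATH and sets JAVA_HOME for every stage. A sketch, assuming a JDK named Java_17 under Jenkins tools. Because the directive is resolved before the stages run, it cannot use a version detected at runtime, so the detect-and-set stage remains the dynamic option.

```groovy
// Sketch: pin one JDK for the whole pipeline with the tools directive.
// 'Java_17' must match a JDK name configured under Jenkins tools.
pipeline {
    agent any
    tools {
        jdk 'Java_17'
    }
    stages {
        stage('Show Java') {
            steps {
                // Both commands see the JDK selected by the tools directive
                sh 'java -version'
                sh 'echo "JAVA_HOME=$JAVA_HOME"'
            }
        }
    }
}
```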

Stage 6 (Multi-Language Build and Test)

This phase covers a variety of stacks: front end (Node.js), Java Spring Boot, .NET, PHP, iOS, Android, Ruby on Rails, Flask, Django, Rust, and Ruby Sinatra. Each stage looks for a marker file in your repository (for example pom.xml, package.json, Cargo.toml, or manage.py); when it finds one, it installs the dependencies, builds the project, and runs the tests as defined in the pipeline script we discussed earlier, and otherwise it skips the stage.

Install the toolchains your project needs (Java, Maven, Node.js, Python, etc.) on the Jenkins agent. Here, I’m using Node.js for my project.

Multi-lang build stages

You can see the pipeline script for the multi-language build stages in the image above.
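The per-language stages all follow the same pattern: check for a marker file, then run its commands. A compact, table-driven sketch of that pattern is below; it is not the author’s pipeline, and the marker files and commands listed are illustrative, so extend the table for the stacks you use.

```groovy
// Sketch: marker-file detection driven by a table instead of one stage per language.
pipeline {
    agent any
    stages {
        stage('Multi-Language Build and Test') {
            steps {
                script {
                    // Each entry: [marker file, [commands to run when it exists]]
                    def buildMatrix = [
                        ['pom.xml',      ['mvn clean package']],
                        ['package.json', ['npm install', 'npm test']],
                        ['Cargo.toml',   ['cargo build', 'cargo test']],
                        ['manage.py',    ['pip install -r requirements.txt', 'python manage.py test']],
                    ]
                    def matched = false
                    // Plain index loops avoid holding non-serializable iterators
                    // across sh steps in CPS-transformed pipeline code
                    for (int i = 0; i < buildMatrix.size(); i++) {
                        def marker = buildMatrix[i][0]
                        def commands = buildMatrix[i][1]
                        if (fileExists(marker)) {
                            matched = true
                            echo "Found ${marker}, running its build and test commands"
                            for (int j = 0; j < commands.size(); j++) {
                                sh(commands[j])
                            }
                        } else {
                            echo "No ${marker} found, skipping."
                        }
                    }
                    if (!matched) {
                        echo 'No supported marker file found; nothing was built.'
                    }
                }
            }
        }
    }
}
```

The trade-off is visibility: separate stages show each language as its own box in the Jenkins UI, while the table keeps the Jenkinsfile short.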

Stage 7 (Docker Build and Push)

In this stage we containerize the project after building the source code. The pipeline checks whether a Dockerfile exists in the repository root: if it does, the pipeline builds and pushes the image; if it doesn’t, it prints “Dockerfile not found” and skips the build and push.

Note: Set the name of your Docker image correctly in the environment block; the stage reads the image name from the DOCKER_IMAGE variable. The file name Dockerfile is case-sensitive. Also add the Docker tool and the Docker registry API URL in your Jenkins configuration.

Build and Push Docker Image

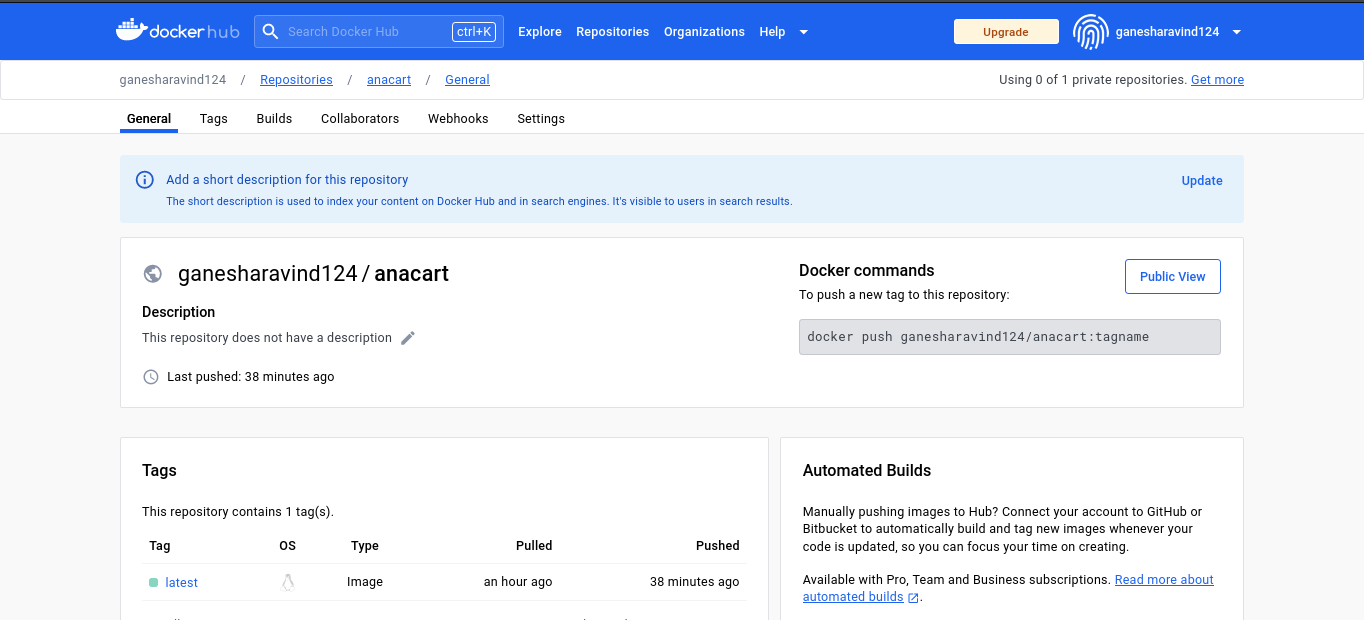

At this stage we push and store the image in a container registry such as Docker Hub, AWS ECR, GCP GCR, Harbor, or others. I used Docker Hub here, supplying my credentials and the Docker Hub registry URL (https://index.docker.io/v1/) so the image is pushed to my Docker Hub repository. Don’t forget to create the repository on Docker Hub first.

To connect to your container registry, add your credentials or personal access token to Jenkins, then reference the credential ID in the environment block.

Environment

Note: You can verify that your Docker image starts correctly by running it locally with the docker run command.
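If you also want an immutable tag per build alongside latest, a variant of the push step might look like this. It is a stage sketch that reuses DOCKER_REGISTRY_CREDENTIALS, DOCKER_TOOL, DOCKER_URL, and DOCKER_IMAGE from the environment block; tagging with the Jenkins BUILD_NUMBER is my assumption, not part of the original pipeline.

```groovy
// Sketch: push both the configured tag and a per-build tag.
stage('Build and Push Docker Image') {
    steps {
        script {
            if (fileExists('Dockerfile')) {
                withDockerRegistry(credentialsId: DOCKER_REGISTRY_CREDENTIALS, toolName: DOCKER_TOOL, url: DOCKER_URL) {
                    // Builds the image tagged as DOCKER_IMAGE (redhaanggara/anacart:latest)
                    def dockerImage = docker.build(DOCKER_IMAGE, '.')
                    // Push the tag the image was built with
                    dockerImage.push()
                    // Re-tag with the build number and push that too, e.g. redhaanggara/anacart:42
                    dockerImage.push("${env.BUILD_NUMBER}")
                }
            } else {
                echo 'Dockerfile not found. Skipping Docker image build and push.'
            }
        }
    }
}
```

With a per-build tag, later stages such as the Trivy scan or the Kubernetes manifest can reference an exact image instead of latest.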

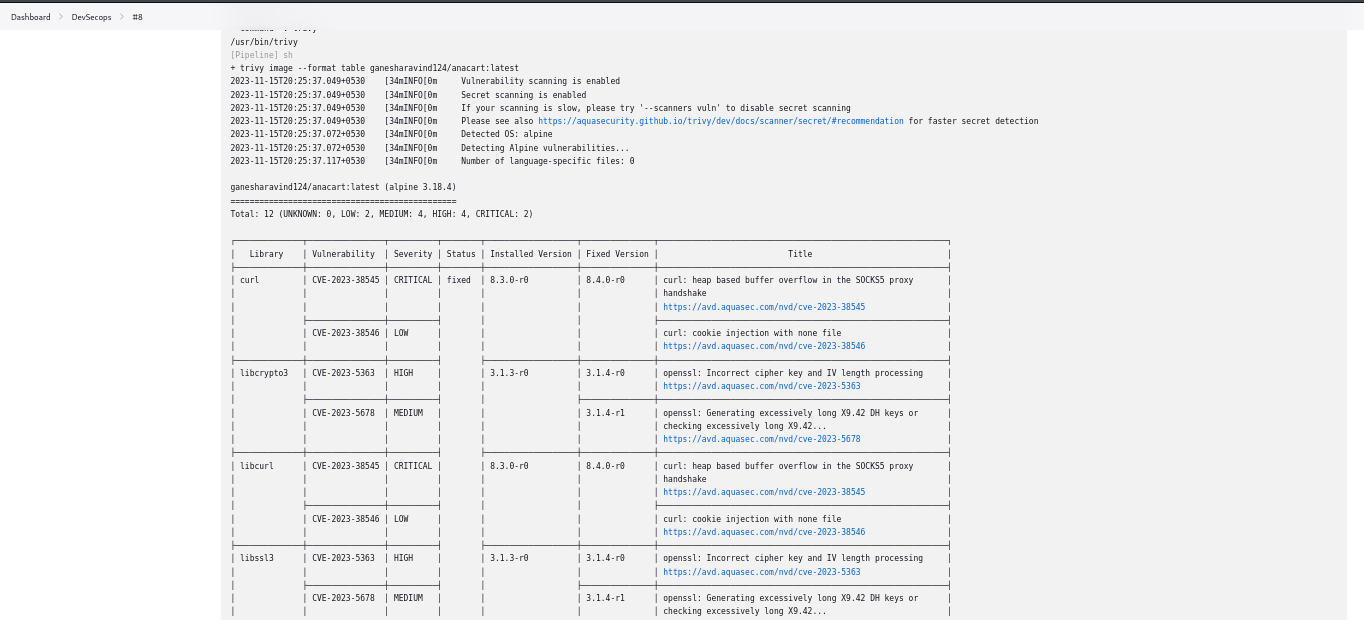

Stage 8 (Aqua Trivy Scan)

Now that the Docker build has finished and the image has been produced, it’s time to scan it for vulnerabilities. We use Aqua Trivy for image scanning.

First, check whether Trivy is installed on the Jenkins agent. If it isn’t, the pipeline falls back to running Trivy from its Docker image. To scan an image manually the same way, put your image name after the image subcommand; mounting the Docker socket lets Trivy scan images that exist only locally:

docker run --rm -v /var/run/docker.sock:/var/run/docker.sock ghcr.io/aquasecurity/trivy:latest image DOCKER_IMAGE

Aqua Trivy Scan

Reference your Docker image here; Trivy scans it and reports the vulnerabilities it detects.
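The Trivy stage in the pipeline only prints a table. A sketch of a stricter variant that keeps a report and flags serious findings is below; it assumes trivy is on the agent’s PATH and reuses DOCKER_IMAGE from the environment block. Marking the build unstable (rather than failing it) on HIGH or CRITICAL findings is a design choice, not something the original pipeline does.

```groovy
// Sketch: archive a full Trivy report and flag HIGH/CRITICAL findings.
stage('Trivy Scan') {
    steps {
        script {
            // Full table report written to a file so it can be archived with the build
            sh "trivy image --no-progress --format table --output trivy-report.txt ${DOCKER_IMAGE}"
            archiveArtifacts artifacts: 'trivy-report.txt'
            // Gate: --exit-code 1 makes Trivy return 1 when HIGH or CRITICAL issues exist
            // (a non-zero code can also mean the scan itself failed)
            def rc = sh(script: "trivy image --no-progress --exit-code 1 --severity HIGH,CRITICAL ${DOCKER_IMAGE}", returnStatus: true)
            if (rc != 0) {
                unstable("Trivy reported HIGH/CRITICAL vulnerabilities (or failed) for ${DOCKER_IMAGE}")
            }
        }
    }
}
```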

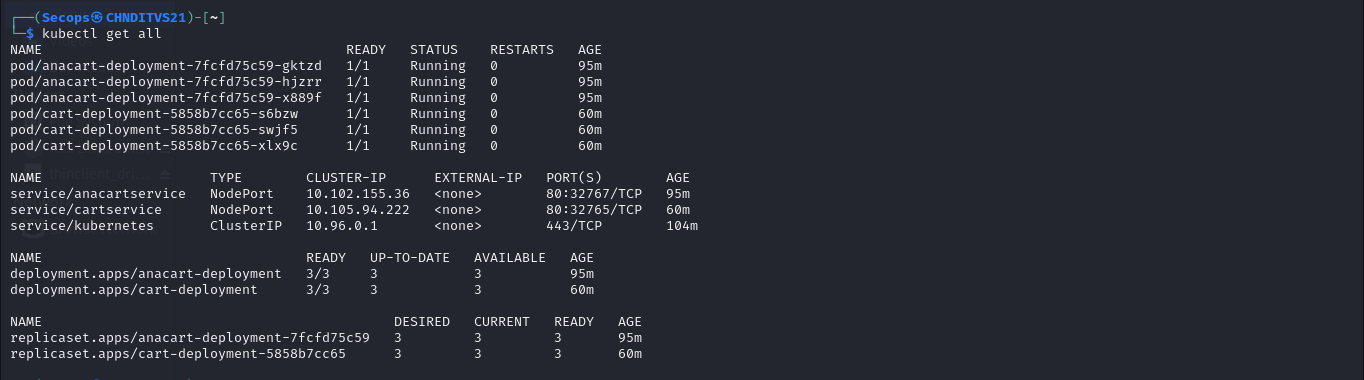

Stage 9 (Kubernetes)

This is the main stage. So far we have built and tested the code, containerized it, and pushed the image to Docker Hub. Now the application needs to run somewhere, and Kubernetes is how we host it.

Make sure a cluster is running before you integrate Kubernetes with Jenkins; minikube, kind, and kubeadm all work. If you want to expose the application through a LoadBalancer service, set up a kubeadm cluster with master and worker nodes (on bare metal, LoadBalancer services also need an implementation such as MetalLB). If a NodePort service is enough, install minikube or kind on your Jenkins agent machine.

Note: You can integrate Jenkins with your Kubernetes cluster by adding the cluster’s kubeconfig file to Jenkins as a credential.

Kubernetes Deployment

In the environment block, set KUBE_CONFIG to the ID of your kubeconfig credential, and set configFile in the stage to the name of your deployment manifest (deployment.yaml).

Environment

Once the deployment has been created, the application runs in your pods. You can check it by running (kubectl get svc) with your service name. With a LoadBalancer service, the output includes an external IP through which you can access the application.

On minikube, running (minikube service MY-SERVICE-NAME) gives you the IP and port number through which you can access the application.
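An alternative to the kubernetesDeploy step is to run kubectl directly. This is a sketch assuming the Kubernetes CLI plugin (which provides withKubeConfig), kubectl on the agent, and the same KUBE_CONFIG credential and anacart namespace as the pipeline; the Deployment name anacart in the rollout check is hypothetical.

```groovy
// Sketch: deploy with kubectl instead of kubernetesDeploy.
stage('Kubernetes Deployment') {
    steps {
        // withKubeConfig writes a temporary kubeconfig from the KUBE_CONFIG credential
        // and sets the default namespace for kubectl inside the block
        withKubeConfig(credentialsId: KUBE_CONFIG, namespace: 'anacart') {
            sh 'kubectl apply -f deployment.yaml'
            // Hypothetical Deployment name; waits until the rollout finishes or times out
            sh 'kubectl rollout status deployment/anacart --timeout=120s'
            // Print the Services so the NodePort or external IP appears in the console log
            sh 'kubectl get svc'
        }
    }
}
```

Waiting on the rollout means a broken image or failing readiness probe fails this stage, instead of surfacing later in the ZAP scan.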

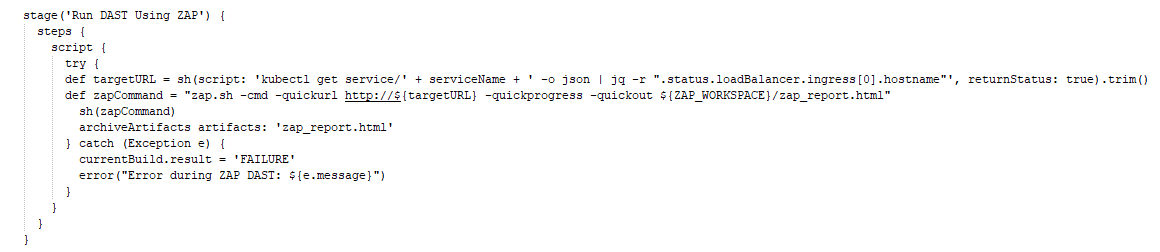

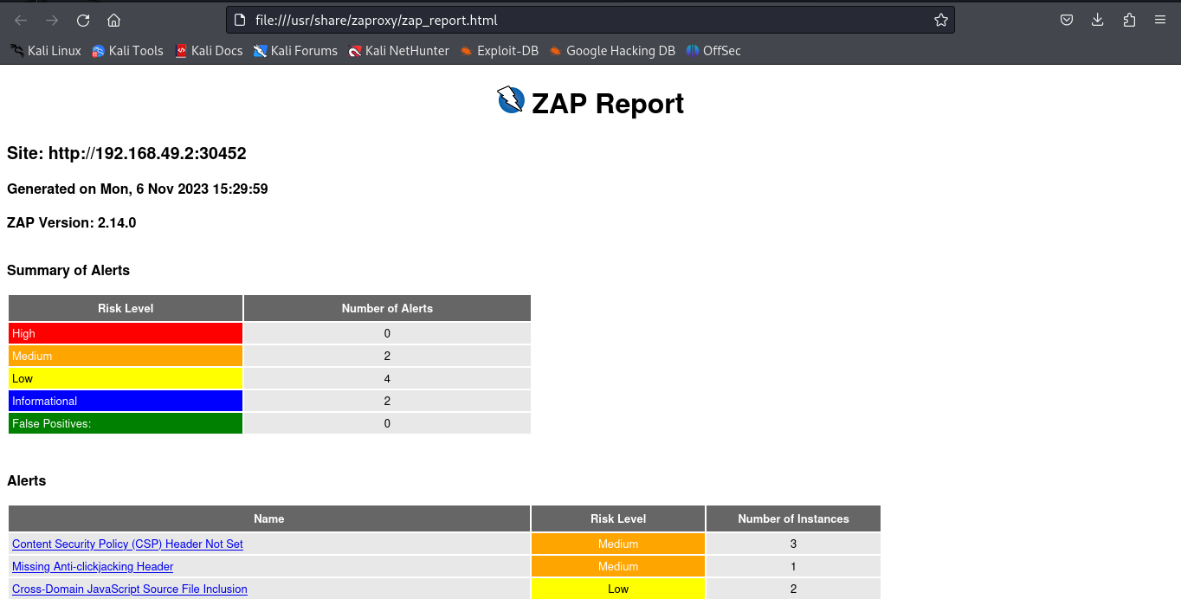

Stage 10 (Zaproxy Testing)

We have already performed SAST and application testing; next we perform DAST (dynamic application security testing), which detects security vulnerabilities by probing the running web application from the outside.

ZAP tests an application hosted in DEV or PROD through its URL. ZAP offers a variety of scan methods, including spidering, active and passive scanning, fuzzing, proxy interception, and scripted attacks. For now, though, I’m running a basic ZAP quick scan that generates a report.

Make sure ZAP (zaproxy) is installed on the machine that runs this stage, whether that is your local machine, a cloud instance, or a server.

Because I used minikube, I provided the URL directly in the Jenkins pipeline.

DAST Scan using Zaproxy

With a LoadBalancer service, you don’t have to enter the URL manually: the pipeline can look up the IP and port and pass them to the ZAP command. Use the script below to detect the URL automatically.

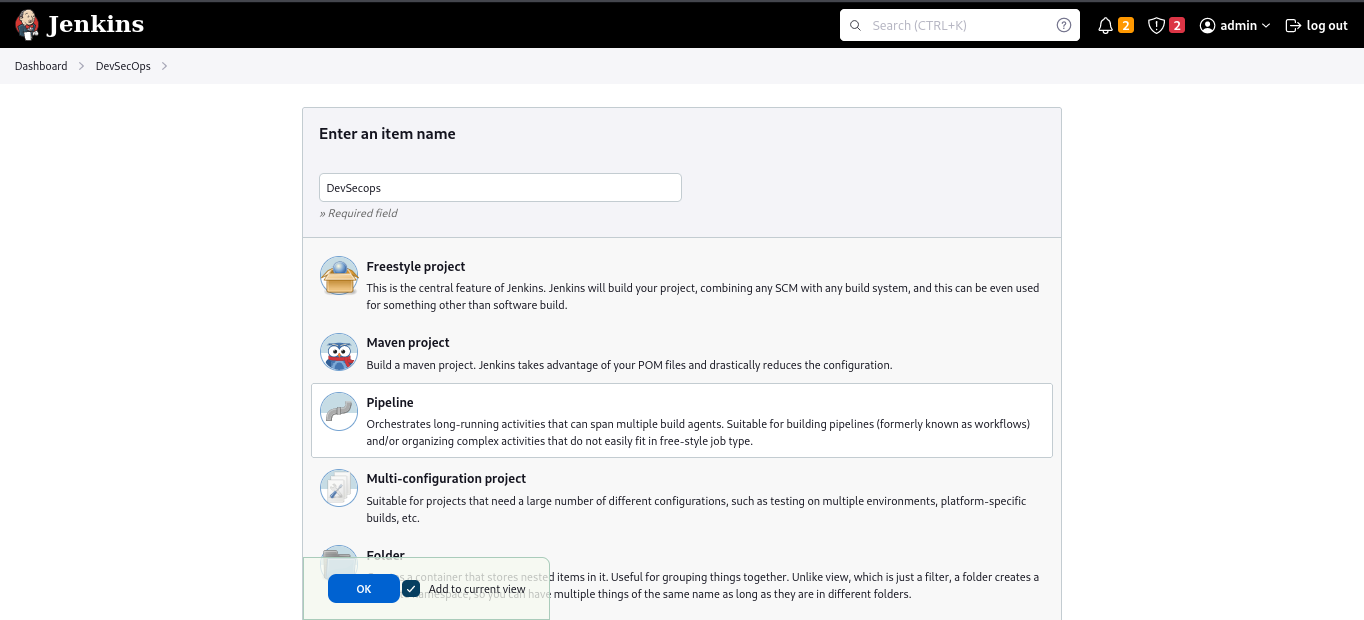

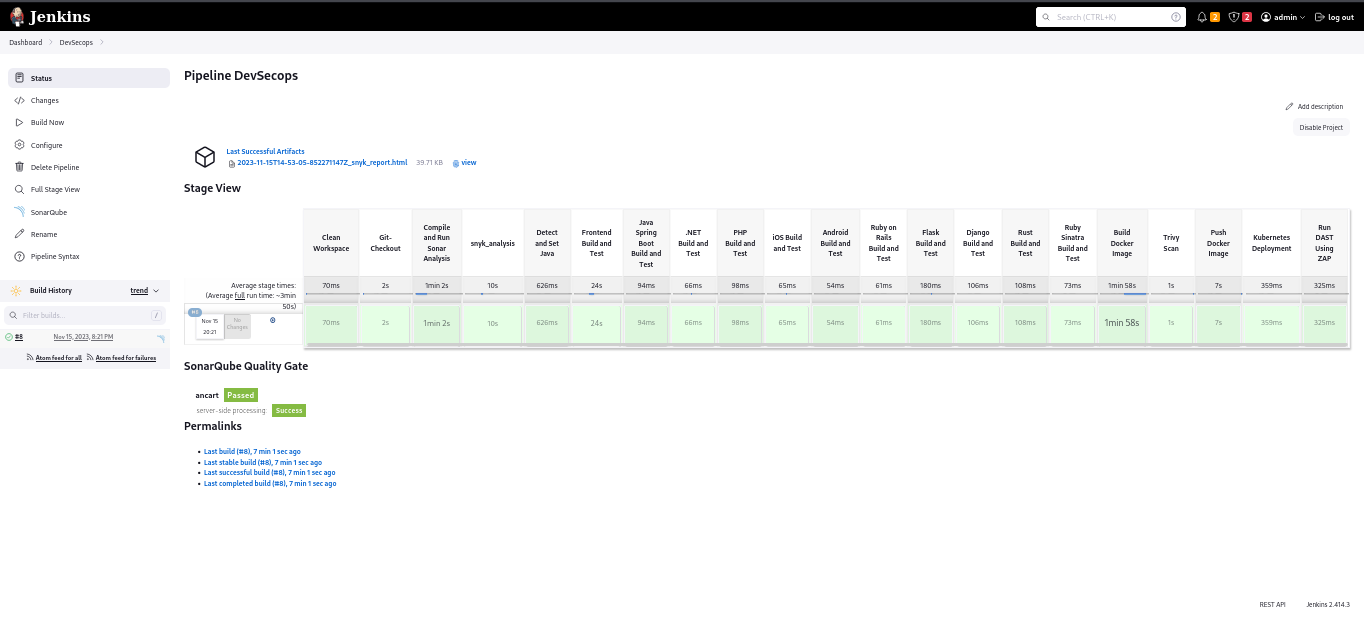
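A sketch of that automatic URL detection is below, assuming a LoadBalancer Service with the hypothetical name anacart-service in the anacart namespace, the Kubernetes CLI plugin for withKubeConfig, and zaproxy on the agent. Some cloud load balancers report a hostname instead of an IP, in which case the jsonpath would read .hostname.

```groovy
// Sketch: look up the Service's external address, then run a ZAP quick scan against it.
stage('Run DAST Using ZAP') {
    steps {
        // Same kubeconfig credential and namespace as the Kubernetes stage
        withKubeConfig(credentialsId: KUBE_CONFIG, namespace: 'anacart') {
            script {
                // Hypothetical Service name; use the one defined in your deployment.yaml
                def svc = 'anacart-service'
                // External IP assigned by the load balancer
                def ip = sh(script: "kubectl get svc ${svc} -o jsonpath='{.status.loadBalancer.ingress[0].ip}'", returnStdout: true).trim()
                // First port exposed by the Service
                def port = sh(script: "kubectl get svc ${svc} -o jsonpath='{.spec.ports[0].port}'", returnStdout: true).trim()
                if (!ip) {
                    error("Service ${svc} has no external IP yet")
                }
                def targetURL = "http://${ip}:${port}"
                echo "Scanning ${targetURL}"
                // Quick scan; -quickout writes the HTML report into the workspace
                sh "zaproxy -cmd -quickurl ${targetURL} -quickout ${env.WORKSPACE}/zap_report.html"
                archiveArtifacts artifacts: 'zap_report.html'
            }
        }
    }
}
```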

Let’s see it in practice by running our pipeline script:

Create a pipeline job and give it a name of your choosing, such as DevSecOps.

Creating New Pipeline Job

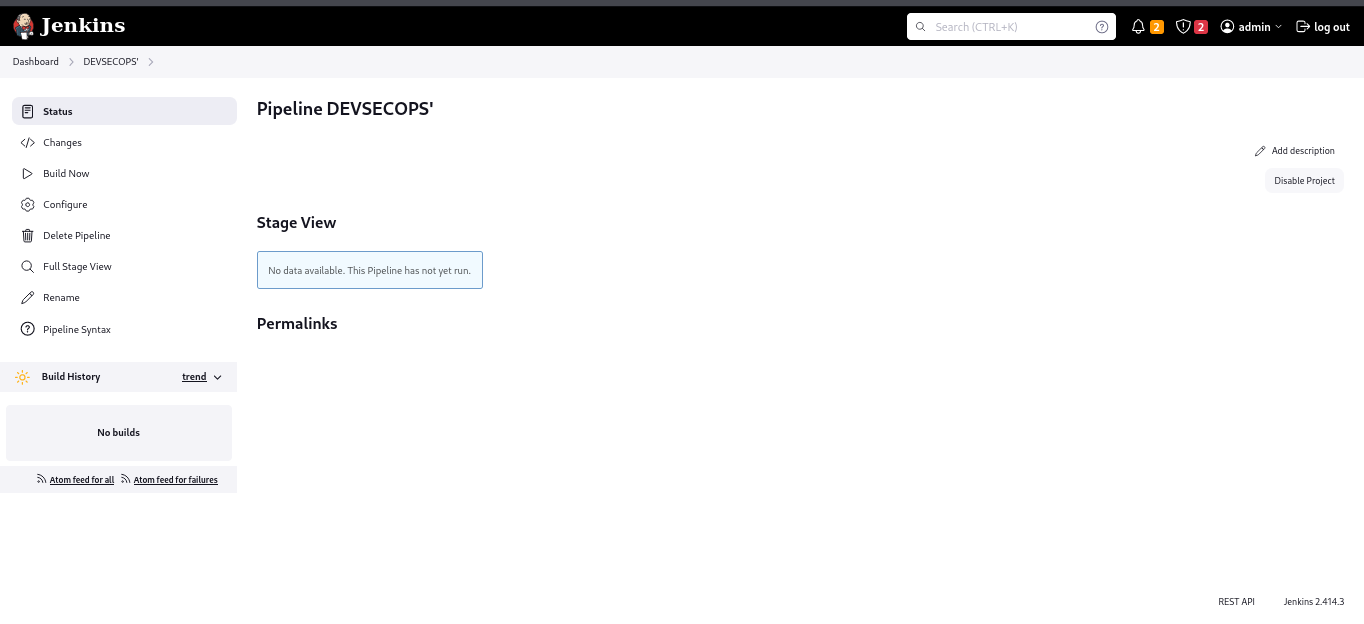

After you create it, the pipeline job looks like this:

New DevSecOps Job

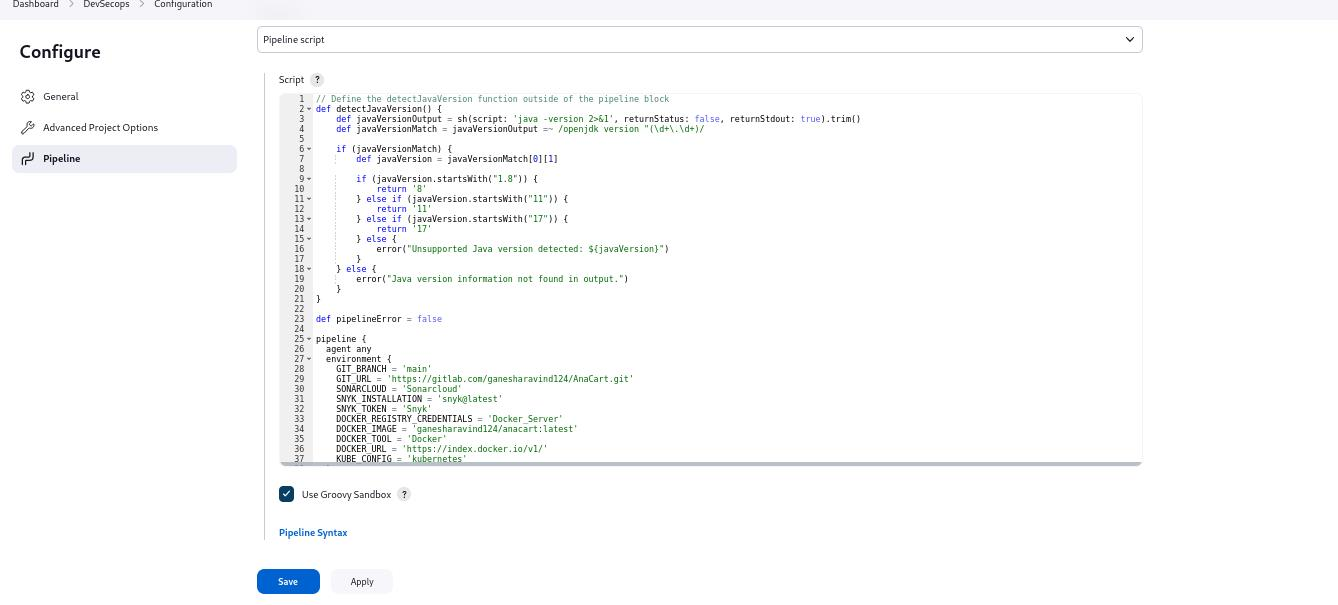

Open the configuration page of the pipeline job and add your Jenkins pipeline script there.

There are two options available:

1. Pipeline script: write your own script directly in the job configuration.

2. Pipeline script from SCM: Jenkins uses the Jenkinsfile in your SCM repository.

Pipeline Configuration

I am choosing Pipeline script from SCM because my Jenkinsfile (Groovy script) is in my SCM.

I will also show you the other method.

Groovy Script

Before saving and applying, check every line, the curly braces, and the credentials. Also make sure the variable names in the environment block match the ones used in the stages; many people make mistakes in this specific area. Next, click “Apply.” If there is a problem, an X appears next to that line. When you click “Save,” the page redirects to the job’s main page.

After that, click the “Build Now” button.

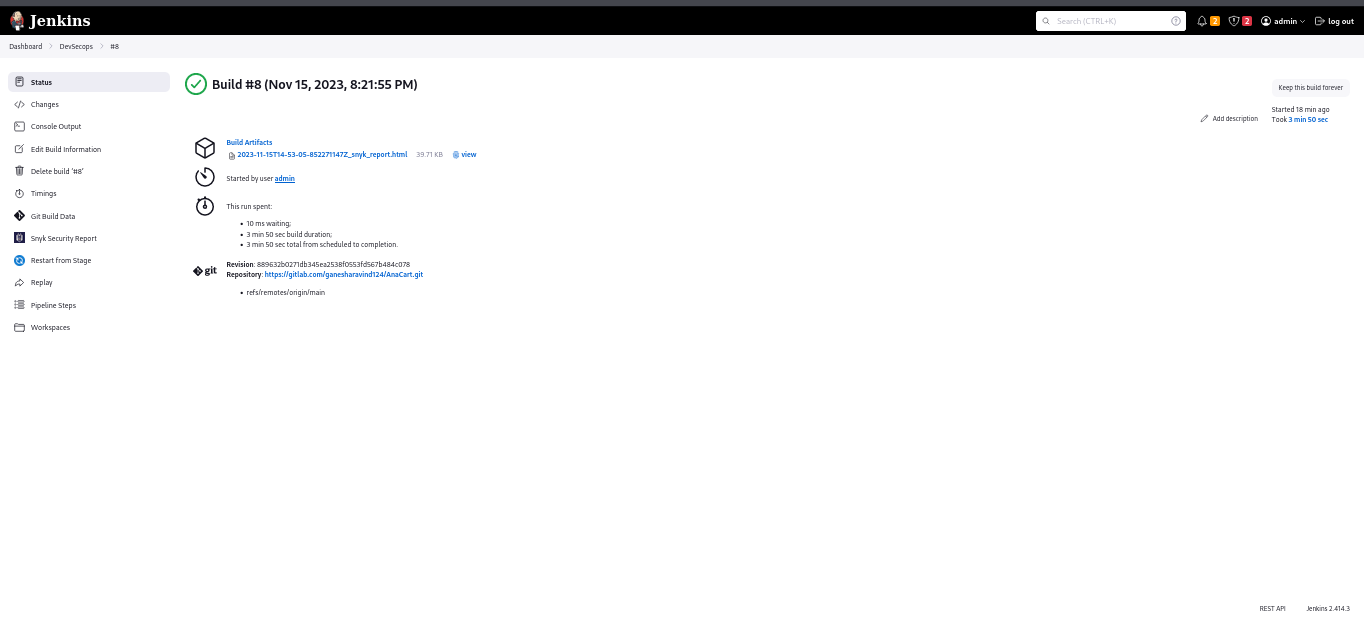

Build History

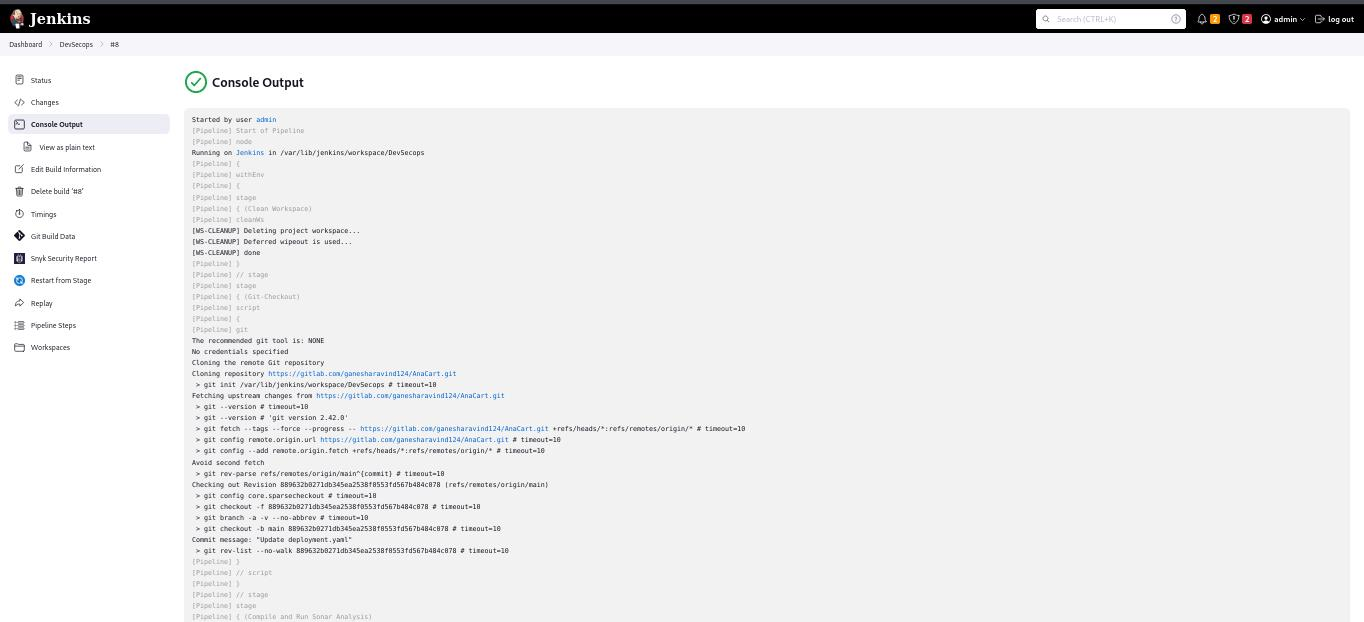

The job begins to execute. You can check the job’s results in the console output to see if there are any problems.

Console Output

The job succeeded. Let’s look at the output.

Pipeline Build Stages

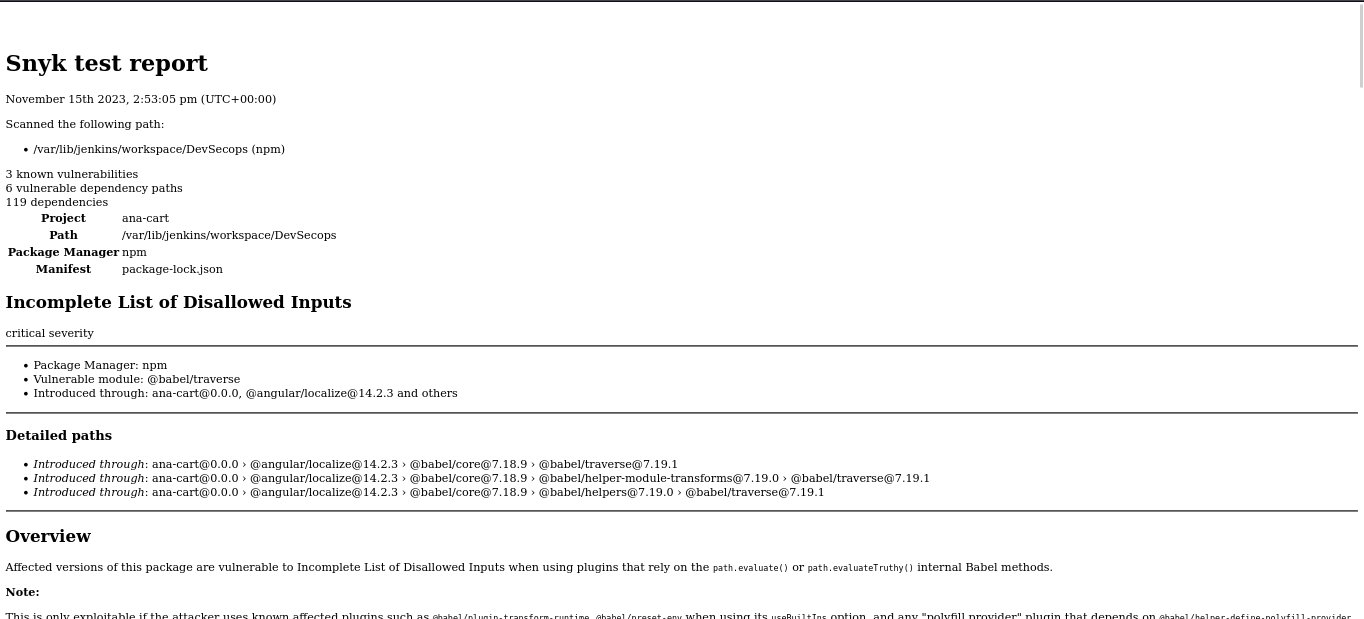

SNYK:

Snyk

Snyk Scan

SONARCLOUD:

SonarCloud

DOCKER HUB:

Docker Hub Repository

TRIVY:

Aqua Trivy Report

KUBERNETES DEPLOYMENT:

ZAPROXY:

Zaproxy Report

The web application is hosted and live.

Materials Apps Application

If you have queries about the pipeline mentioned above, please get in touch with me on LinkedIn.

#DevSecOps #DevOps #CI/CD #Jenkins #Automation #SoftwareDevelopment #DevOpsTools #Docker #Kubernetes #SecurityTesting #CodeQuality #Programming #TechInnovation #SoftwareEngineering #ITOps #AgileDevelopment #AWS #GCP #ContinuousIntegration #ContinuousDeployment #snyk #sonarcloud #gitlab #Zaproxy #Dockerhub #ECR #GCR

Repository:

https://github.com/praveensirvi1212/DevSecOps-project/tree/main

gitlab.com/ganesharavind124/AnaCart

linkedin.com/in/ganesh-aravind-shetty-26976..

https://github.com/dev-sec/ansible-collection-hardening

https://medium.com/@dhananjaytupe748/ci-cd-with-ansible-devsecops-petshop-project-250a8b7a5188
